Linux Articles

Having just returned from a trip to my local Best Buy, I was posed a question by a friend: "Would you rather live in a walled garden, or in a desert?" This can feel like the choice we are forced to make between proprietary technologies and free or standardized technologies.

The context of the question was the Samsung Galaxy S4 Google Edition (i9505g) and Miracast. At Google IO 2012, Google announced that Android 4.2 and above would support the wireless display standard known as Miracast. Since then, support has been virtually nonexistent. This includes both the Nexus 7 and the Nexus 10, both of which were launched with Android 4.2 (after the announcement), but without Miracast.

Since then, Google has launched exactly two devices with Miracast support: the first is the Nexus 4, and the second is the Galaxy S4 Google Edition.

Giving it a Try

Searching the internet for Miracast-capable devices results in around 4 devices, which state support for a confusing blur of standards, sometimes ignoring Miracast entirely. They all seem to cost $60+ at this point.

The closest thing that Samsung is pushing hard is the AllShare Cast capability, which all of their TVs and devices are equipped with. This proprietary version of display streaming seems to work very well when you have a handful of Samsung devices, but do I want to be forced into using Samsung's standard and only buying their devices? If I wanted to do that, I could just buy only Apple products and most likely have a superior experience with an iPhone, iPad, and an Apple TV.

I went to Best Buy to try out Miracast's compatibility with these devices before making a purchase.

We found 6 TVs that stated support for Wireless Display, and 4 of them even showed up on my SGS4 GE as available, but connecting was flaky. Although I was able to mirror my phone on 2 Samsung TVs, the connection was slow and only lasted around 20 seconds before failing.

Results

Perhaps the Miracast technology is too new, but it's disappointing that neither the proprietary Android world nor any open source project has adopted it. Theoretically Linux or Ubuntu could provide direct support for the streaming standard over Wi-Fi, but to date there seem to be no attempts to do so. The closest was a project to add Miracast to XBMC on the Raspberry Pi.

Canonical Announces Ubuntu For Phones

by Stephen Fluin 2013.01.02

There is now a fourth player in the smartphone arena (and it's not RIM).

Today at 12:00PM CST, Canonical released a video recognizing the past and announcing the future of Ubuntu. The future, as proposed by Canonical's Mark Shuttleworth, is for Ubuntu to be a universal computing platform that treats apps, content, and data as universal, with customized interfaces for different form factors like tablets, TVs, phones, and desktops.

This news is exciting because, with a Linux and open source base, developing applications for multiple architectures and multiple devices will be an easier dream to achieve. This would be a strong message to Apple, Microsoft, and Google that they have failed to unify all of the devices of a user. Ubuntu is a platform that I have used every day of my life for the last 4 years in the desktop space; taking that level of capability, stability, and power to other devices may be a winning combination.

In early 2012, Ubuntu TV was announced as the first extension to the desktop Ubuntu experience. Shortly thereafter, Ubuntu for Android was announced. Ubuntu 12.10 was heavily optimized for touch and tablet interfaces. Now, to complete the spectrum, Ubuntu for phones is here.

Major Innovations

The biggest and most exciting accomplishment has been the promotion of web applications as true applications. I've been predicting this from Google's Android for a while, but it looks like Ubuntu may beat them to it. Apps built using HTML5 for iOS and Android will work perfectly on this device.

QML is exciting as a native development technology: it combines standard application development methodologies with simplified markup, using JavaScript and CSS as UI glue.

Finally, Ubuntu has been working on intelligent and context-based menus for more than a year now. On Ubuntu for phones, they are exposing all of these interfaces via voice commands, surpassing even Google's voice capabilities.

Support for Android-designed hardware is a part of the plan. This is a huge deal and something neither Microsoft nor Apple could ever replicate. It means that any Android phone (and there are a ton of great ones) should be able to run Ubuntu, so all of the great Android hardware I've acquired should work very well with this exciting new mobile operating system.

Ubuntu's Challenges

The biggest challenge facing them is a lack of experience with heavy cloud applications. Historically they have relied on third parties, which could result in a fragmented or broken experience. This is exacerbated by the fact that the major service providers also have their own mobile platforms, so support may be slow in coming or completely missing.

As of 1:00PM CST, their app development website is offline due to heavy load. This is an interesting sign of the level of interest around developing for this platform.

Currently there are no plans for wearable computing, which will be an area for Google to innovate and easily exceed the capabilities of Apple, Microsoft, and Ubuntu.

How To Be a Hacker

by Stephen Fluin 2012.12.30

There are several things I do when I sit down at someone else's computer for the first time. I start by loading up a web browser, finding and downloading PuTTY, and logging into my systems remotely. I typically need to do this to dig a DNS server, to port scan a computer, to SSH into another server, or just to look at a file or two on my computer. The most common reaction when I do something like this is one of awe.

People look at this type of terminal connection as the playground of hackers. In reality, using an SSH terminal as the basis for connecting to the world of computing is a very natural extension of many of the advanced computing principles used by most people in the world. I'm thinking about putting together a step-by-step guide to getting access to a meaningful shell server, and starting to live a life that, to the majority of the public, resembles that of an elite hacker. Let me know what you think.

permalink

The S11 is a high-quality Bluetooth headset with very decent audio. I use mine for going to the gym, or just listening to music on my phone while travelling, or even sitting at my desk.

I have a Bluetooth adapter that is plugged in and working well with my Kubuntu 12.10 installation. When I plugged in the adapter and paired with the S11 headset, everything went great. I could play audio from my computer on my headset, and the device even mixed in audio from my phone at the same time. The problem came when I tried to listen to music and everything came out low quality.

I tried both the System Settings in KDE and pavucontrol (which I installed for the purpose), but I didn't have any luck switching the device away from HSP/HFP, which is the headset protocol, to A2DP, which is the audio protocol. After debugging for quite some time, I realized that the headset is capable of mixing streams, but only by combining these two protocols.

The solution was to unpair my phone (or temporarily turn off Bluetooth on it), after which my computer could select the appropriate profile for high-quality audio streaming.

Make your website more SPDY

by Stephen Fluin 2012.04.18

Google's relentless quest to improve performance for all things technological continues. They have just hit a huge success with SPDY, their replacement for HTTP for web transactions. Now, with a few simple Linux commands, you can download, install, and activate an Apache module that will speed up everything for all of your users whose Chrome or Firefox browsers support it. This is a great thing to do because it is one half of the SPDY adoption question, and will help move the entire web forward into a faster, newer generation. Fortunately there is no downside. Users not yet capable of SPDY will simply fail over to the slower HTTP transaction.

Install SPDY on Apache by doing the following (assuming a 64 bit system):

That's it, you are done. You will now see Chrome users negotiating with SPDY. You can verify this by visiting Chrome Network Internals.

Localize Ubuntu Installs To Your Timezone

by Stephen Fluin 2012.03.14

One of the common tasks with a new Ubuntu server setup is to localize the server to a timezone. Timezones are used by the filesystem for timestamps, they are used by PHP to perform date lookups, and by any databases when doing date comparisons based on NOW().

GNU/Linux, and Ubuntu in particular, stores time by keeping the system clock synchronized to UTC (Coordinated Universal Time) and then storing an offset to the localized timezone. To set this up in Ubuntu, just reconfigure the tzdata package by running sudo dpkg-reconfigure tzdata.

After running this command and entering your sudo password, you will be prompted for which geographic region you are in, and then prompted for a selection of timezones. After selecting the correct timezone for your server (for me, I like to keep my servers localized to my local time), you will need to restart any of the applications that use this. For a standard webserver, you will need to run the following:

Synchronizing and Updating the Server Time

Over time, the clock will get out of sync with the true passage of time (measured by atomic clocks across the globe, by our GPS system, and by several publicly available network time pools). Ubuntu makes it very easy to resync your system clock to the network time pool. In stock GNU/Linux, this is achieved by running the ntpdate application and supplying a network time pool. On Ubuntu it's even easier: you can just run sudo ntpdate-debian, which will sync your system against the Debian-specified time servers.

You can put this command in a crontab to frequently resync your clock. Syncing the clock takes very little network or CPU. My experience shows that depending on the quality of the hardware clock, your system will get a second out of date every few days, or every few weeks.
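As a rough sketch of the crontab approach, the snippet below prints a cron entry that runs ntpdate-debian once a day. The 03:17 schedule, the /usr/sbin path, and the helper name ntp_cron_line are assumptions made for illustration; check the real path with command -v ntpdate-debian on your own system.

```shell
# Print a crontab line that runs ntpdate-debian at the given minute and hour.
# The /usr/sbin path is an assumption; verify it with: command -v ntpdate-debian
ntp_cron_line() {
    minute=$1
    hour=$2
    printf '%s %s * * * /usr/sbin/ntpdate-debian >/dev/null 2>&1\n' "$minute" "$hour"
}

# Example: resync once a day at 03:17 (an arbitrary, hypothetical schedule).
ntp_cron_line 17 3
```

The printed line can be pasted into root's crontab (sudo crontab -e), since setting the clock requires root.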

MortalPowers - A Time For Change

by Stephen Fluin 2012.02.17

Running a website requires numerous 3rd parties that provide a wide variety of services. From a domain name, to hosting, to DNS hosting, to SSL certificates, to email servers, there are a lot of pieces that one can easily forget about once they are set up properly. This is a good thing because if the technology works as originally intended, there should be no maintenance time or cost. Aside from just maintainability, you should take time every few years to re-evaluate the providers you rely on. Are there better, or cheaper alternatives? Do you need the services you are paying for, or do you possibly need additional services that you didn't need when you were smaller? I recently took a look at several of my providers and have decided to make some changes.

Hosting and DNS, From Slicehost to Linode

I used Slicehost for a long time. 3 years ago, they were competitively priced and offered a great set of tools for the creation and management of virtual servers. About a year ago, they were purchased by Rackspace. This purchase started a long downhill slide for them. The first issue that I had with them was several repeated bouts of downtime. Second, we started having issues where our servers would suddenly become completely unresponsive. With their purchase by Rackspace, it became clear that they were going to move me to the Rackspace Cloud, which had a worse interface with fewer features compared to the original Slicehost. Lastly, despite my having signed up 3 years ago, their prices are the same today as they were back then. I evaluated a few alternatives, such as Prgmr, a fully command-line based bottom-of-the-line VPS service. Although the prices were good, I decided that the lack of DNS hosting and the need to learn Xen commands were unappealing to me. I also evaluated Linode, and determined that they have full feature-parity with Slicehost, and double the server capabilities for the same price.

Moving to Linode

To move my systems, I created a Linode account and started up the smallest available node size. While my node was being built, I logged into the DNS managers of both Slicehost and Linode side by side, and spent 5 minutes copying all of the records from one to the other. I left the IPs the same until I could get the new server set up. Then, I methodically rsynced several folders between the old server and the new.

- /var/www/

- /etc/apache2/sites-enabled

- /etc/postfix/

- /etc/ssl/
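The copy of those four folders could be sketched roughly as follows, run from the new server. The old server's address (root@old-server.example.com), the rsync flags, and the helper names are assumptions made for illustration; by default the script only prints the commands, so nothing is transferred until DRY_RUN=0 is set.

```shell
# Dry-run helper: print the command by default; set DRY_RUN=0 to really run it.
DRY_RUN=${DRY_RUN:-1}
run() {
    if [ "$DRY_RUN" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical address of the old server; replace with your own.
OLD_HOST=${OLD_HOST:-root@old-server.example.com}

# Pull each folder from the old server to the same path on the new one.
# Trailing slashes make rsync copy directory contents rather than nest them.
sync_from_old_server() {
    for dir in /var/www/ /etc/apache2/sites-enabled/ /etc/postfix/ /etc/ssl/; do
        run rsync -az "$OLD_HOST:$dir" "$dir"
    done
}

sync_from_old_server
```

Here -a preserves permissions, ownership, timestamps, and symlinks, and -z compresses data in transit. Reviewing the printed commands before running them for real is a cheap safeguard when overwriting folders under /etc.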

Once I had copied the files, I needed to re-enable the PHP mods I was using, install all of the necessary packages, and transfer the database. From there, I started up apache, checked that everything was good to go, and updated my DNS settings to point to the new server.

Domain Names and SSL - Godaddy to Namecheap

With Godaddy's SOPA support, and a clear profit-centric website, I decided to evaluate other domain name providers. For me, a domain name registrar needs to be as cheap as ICANN allows, provide WHOIS, and preferably just get out of the way of me using their services. I also prefer to have my SSL certificates come from the same provider to save me time.

Moving to Namecheap

Transferring domain names isn't the easiest thing in the world, but it's definitely doable. It will require that you extend any of the domain name purchases for an extra year. In order to minimize the cost of transferring all of my domain names, I settled on a 4 stage plan. Once per quarter, I would transfer the next 1/4th of my domain names based on expiration date. As of February, I have transferred half of my domain names. There are several great articles about how to do this, but the general steps are:

- Generate Authorization Code - See http://help.godaddy.com/article/1685#active

- Unlock the domain names you wish to transfer

- Purchase the transfers on NameCheap

- Enter the Authorization Code

- Make sure to set up the nameservers correctly. Changes can take up to 72 hours to propagate (more often 5-6 hours).

- Approve the transfer request you receive in email

Top 5 Nightlies Safe to Run Daily

by Stephen Fluin 2011.09.08

Some of us live on the edge. We sacrifice stability to be on the bleeding edge of technology, experiencing new features immediately as they are added. Here are my top 5 pieces of software that are generally safe to update on a daily basis.

Chromium

| Application | Frequency of Breaking | Difficulty of Downgrading | Notes |
|---|---|---|---|
| Chromium Browser | Once Per Quarter | Medium | Upgrading requires only a standard package update, ie sudo apt-get update && sudo apt-get upgrade. Downgrading involves searching the internet for an earlier version, or if you are lucky, finding a copy in your apt cache. |
| CyanogenMod 7.1 | Weekly | High | It seems that nightlies for CyanogenMod don't undergo quality assurance, as occasionally very basic things like phone, internet, or battery usage will become completely broken. |
| Wine* | Never | High | Although not technically a nightly, the unstable releases of Wine are often worth a lack of stability for additional compatibility. Commonly each release of the Wine project seems to take 3 steps forward, and 1 step back, breaking some functionality with each release. |
| Ubuntu | Each Release | Extremely High | Ubuntu Unstable releases tend to be one of the best ways to preview and try out upcoming functionality. I recommend this only for the strong-willed, as attempting to run development versions of major revision changes has around a 30% chance of completely breaking your system, forcing a reinstall. |
| FFmpeg | Never | Medium | FFmpeg is relatively easy to compile and install once you have the source code checked out from their repository. This project is frequently updating with additional codecs as well as improvements to processing speed and compression quality. Downgrading requires checking out an earlier version from source and recompiling. |

Optimize PNG images with OptiPNG

by Stephen Fluin 2011.08.17

PNG is a great free, lossless, compressed image format. One thing that may surprise you is that PNGs can actually be further compressed, making the filesize smaller than a normal image application will typically achieve. The good news is that there is a tool called optipng that makes it easy to non-destructively shrink PNG files by recompressing them without changing a single pixel.

On Ubuntu, install OptiPNG with sudo apt-get install optipng. Once it is installed, you can run it in its default mode by typing optipng file.png. If you feel like giving the application lots of time to achieve aggressive compression, you can use the -o flag to select a higher optimization level, up to -o7. Comparing the default with -o7, I have found only minimal additional compression that has almost never been worth the additional CPU time.
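To check that trade-off on your own images, a small comparison along the following lines may help. It is only a sketch: the helper names and output file names are made up for illustration, and it assumes that level 2 is optipng's default, which is my understanding of the tool rather than something measured here.

```shell
# Percentage saved when a file shrinks from $1 bytes to $2 bytes
# (integer arithmetic, rounded down).
percent_saved() {
    echo $(( ($1 - $2) * 100 / $1 ))
}

# Optimize copies of a PNG at level 2 (assumed default) and at -o7, leaving
# the original untouched, and report the size of each result.
# Requires optipng to be installed; the copy names are hypothetical.
compare_levels() {
    src=$1
    before=$(( $(wc -c < "$src") ))
    for level in 2 7; do
        copy="${src%.png}.o${level}.png"
        cp "$src" "$copy"
        optipng -o"$level" "$copy" >/dev/null 2>&1
        after=$(( $(wc -c < "$copy") ))
        printf '%s\n' "-o$level: $before -> $after bytes ($(percent_saved "$before" "$after")% saved)"
    done
}

# Usage (not run here): compare_levels file.png
```

Working on copies keeps the original file available in case you want to try other levels later.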

Below are the results of one such compression attempt:

Master the Art of SSH Tunneling and Forwarding

by Stephen Fluin 2011.08.16

SSH tunneling is the practice of using shell accounts on various computers and servers to acquire and redirect networking between multiple machines. Becoming more adept at SSH tunneling will make your digital world more portable, and enable you to access targeted machines or content, regardless of what network you or the target are on.

SSH Tunneling is great because it allows fully encrypted communication between two servers, even when the services at either endpoint may not use encryption.

Common Use Cases for SSH Tunneling

- Access services only listening on localhost

- Access router configuration remotely

- Proxy into protected networks

Basic SSH Tunneling Concepts

The first and most important concept to understand around SSH tunneling is that in every communication there is a client and a server. Understanding which machine is which is necessary to understand any of the information below. In general terms the client is the machine that will be initiating the connection. The client machine will not require any incoming permissions. A server in a single ssh connection is the machine running sshd and must be accessible via SSH.

Local Forwarding

Local forwarding is the act of taking a remote port and mapping it to a local port on the client machine. This is the most common type of connection and enables proxy-like functionality. Local forwarding is accomplished using the parameter -L (hyphen, then capital L). Every local forwarding command is going to be of the format -Lclientport:destination:destinationport. In this format, clientport is the port on the client machine that will begin listening on localhost. Destination and destinationport are the IP/hostname and port from the perspective of the server. After successfully opening an SSH connection with local forwarding, any attempt to open clientport on localhost will result in the remote server opening a connection to destination:destinationport.

Note: If you wish to open a privileged port (below 1024, for example 80, 631, or 25) on the client, you must have root permissions. Typically this is not a problem, because there is no real need to have the client listen on a low port number.

Note: It is also important to note that this connection will be listening on localhost:clientport. This means that you will not be able to access the SSH forwarding from a second degree client, without additional SSH tunneling. An example of this would be where I have 3 machines. Machines A1 and A2 are on network A, and machine B1 is on network B. In this example, if I use local forwarding to connect from A2 to B1, A1 will not be able to access any tunneling performed by A2.
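To make the -L format concrete, here is a small sketch that assembles the argument from its three parts and prints the resulting command rather than running it. The function names and the server address user@gateway.example.com are hypothetical placeholders.

```shell
# Build the -L argument in the form clientport:destination:destinationport.
local_forward_arg() {
    printf '%s%s:%s:%s\n' '-L' "$1" "$2" "$3"
}

# Print the full command instead of running it, so it can be inspected first.
# The fourth argument is the SSH server to log into (a placeholder here).
local_forward_command() {
    printf 'ssh %s %s\n' "$(local_forward_arg "$1" "$2" "$3")" "$4"
}

# Listen on localhost:8080 and have the server connect to 192.168.0.1 port 80.
local_forward_command 8080 192.168.0.1 80 user@gateway.example.com
```

Once the printed command is actually run and the session is open, pointing a browser at http://localhost:8080 on the client reaches port 80 of 192.168.0.1 as seen from the server.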

Remote Forwarding

Remote forwarding is the more difficult to understand, as it involves opening a port on the server and forwarding those requests to the local machine. This is generally less useful, but can still accomplish some interesting things. The format for remote forwarding is as follows: -Rserverport:localdestination:localdestinationport. In this case, you must have permissions to open serverport on the server, or tunneling will not succeed. Any requests made to localhost on the serverport in the server environment will be forwarded to localdestination:localdestinationport from the perspective of the client.

There are relatively few real world applications of this, but one that I have run into more than once is attempting to run an SSH server on a machine that has no incoming traffic privileges. In this case I use the protected machine as an SSH client to connect to some less secure machine. In the SSH connection, I forward a remote port (2200 is easy to remember) to the local SSH server (localhost:22) with the following parameter: -R2200:localhost:22. This confusing example swaps the client and server roles: it allows you to SSH into a protected machine by first establishing a connection in which the protected machine acts as the SSH client.
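The two halves of that reverse connection can be sketched as a pair of commands, printed here rather than executed. The relay host relay.example.com, the user name me, and the function names are hypothetical; port 2200 and localhost:22 follow the text above.

```shell
# Step 1, run on the protected machine: open the given port on the reachable
# server and forward it back to the protected machine's own sshd (port 22).
reverse_tunnel_command() {
    printf 'ssh %s%s:localhost:22 %s\n' '-R' "$1" "$2"
}

# Step 2, run on the reachable server: connect to the forwarded port, which
# lands on the protected machine's sshd.
connect_back_command() {
    printf 'ssh -p %s %s@localhost\n' "$1" "$2"
}

reverse_tunnel_command 2200 me@relay.example.com
connect_back_command 2200 me
```

In step 2 the user name is an account on the protected machine, not on the relay, because the connection ends up at the protected machine's SSH server.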

SSH Tunneling Examples

For these examples, assume that there are two private networks (A:192.168.0.* and B:10.0.0.*), and one internet site (C:180.0.0.1). Also assume that there is only one publicly accessible IP from each private network (192.168.0.10 and 10.0.0.10). Finally, both of the private networks have a router/modem configured at *.*.0.1.

Access private network A's router

ssh 192.168.0.10 -L8080:192.168.0.1:80 - Once this connection is established, you can pull up the browser and visit http://localhost:8080 and you will reach the private network's modem configuration. This is a great way to remotely manage port forwarding and NAT without opening your router to the internet.

Access CUPS on a computer not yet configured for sharing

ssh 192.168.0.2 -L6310:localhost:631 - Part of the default CUPS printer configuration is that it only listens on localhost, and is not accessible from other machines. This SSH connection will enable you to open your browser to http://localhost:6310/ and access the private CUPS configuration.

Forwarding X sessions

ssh -XYC 192.168.0.10 - Although not technically using tunnelling, the XYC parameters will establish the $DISPLAY variable on the server, and allow you to run X applications on the server. These X applications will then be shown on the client environment. This typically runs very slowly, but uses the server's CPU and Memory and Disk and Network, rather than the client's. I often use this for remotely running partition management, such as gparted.

Logitech Revue will Break your Google TV

by Stephen Fluin 2011.08.08

Recently a preview release of Google TV for the Logitech Revue was leaked. This preview has some exciting components. It is based on Android 3.1 and includes the Android Market. The leaked build isn't a hacked version, and doesn't require root or any customization to work. Simply download and unzip the package, place it on the root of a flash drive, and restart the Google TV; it will automatically update your device.

Overall, the only improvement provided by the update is that it will allow you to preview the upcoming release. There are many downsides, and I recommend you don't download and install it, as there is currently no stable release and no way to downgrade your device.

You will be able to browse the internet, and see the new settings. Beyond that, NOTHING works.

SuperOneClick Can Root Your Vibrant With Froyo

by Stephen Fluin 2011.04.30

I was extremely frustrated by the fact that installing Froyo on my Samsung Galaxy S (Vibrant) removed root from my system. Unfortunately, the install also broke compatibility with the existing rooting tools. The good news is that there is a new way to root your Vibrant with Froyo using a tool called SuperOneClick.

SuperOneClick

SuperOneClick is a tool that leverages .NET, so you will need a good Mono install if you are on Linux. I actually recommend borrowing a Windows machine for a smoother experience in rooting your device. SuperOneClick was developed by the XDA community. It may trigger virus scanners because technically it includes a "fork bomb" designed to hack into Android devices, and I make no warranties that someone hasn't done something malicious, but at the very least, this piece of software successfully rooted my device.

Root your Phone

The following steps should quickly re-root your phone. This works with many different Android devices, and it was the first tool I have found to root the Samsung Galaxy S after the Froyo update.

- Download SuperOneClick 1.9.1 (there may be newer versions since posting, see XDA).

- Unzip the archive and run the application.

- Connect your phone and make sure Debug is On. You can change this in the settings.

- Click on "Root" in SuperOneClick

- You are done!

What to do with Root

Besides installing a custom ROM (of which there are no good ones yet for the Samsung Vibrant), I typically recommend you install Titanium Backup, which will back up all of your applications and data. This even includes system applications, which is amazing because there is no other way to back up or migrate that data.

permalink

The biggest shift currently occurring in the software development world is the shift towards application stores and marketplaces. Everyone knows that mobile is currently the new frontier in computing, and we are rapidly moving towards a world where mobile smartphones/tablets outnumber desktop computers. Portable devices will be what everyone is using for everything in 10 years (I believe they will also have the modularity to take on desktop-like qualities when at a "base station" for additional power and bandwidth, but that's a separate thought). This means that the trends and occurrences in mobile will matter greatly, not only on their own, but I also believe these trends are going to make their way back to the desktop and recombine and continue to grow and evolve.

Prediction One: Chrome Web Store supports Android

In short, this means that all of the web applications currently in the chrome web store will become available on Android. This would mean that a company (Flixster for example) could develop an application using HTML that works on desktop computers as well as on mobile. Users could then visit this "application" or website using their computer, or they could install it on the Android phone, and the application would get instant access to location-awareness, notification support, and local storage.

I see these applications being integrated into the Android Marketplace, and users are going to be less and less able to tell the difference between an Android application (written in Java using the Android SDK and libraries), and a web application (written in HTML using whatever technology desired, but necessarily using JavaScript).

Timing

Google will need to make this move quickly to secure their position as mobile leader, so I see them announcing this technology at Google IO 2011, which should be happening in May. If they can launch this technology, and quickly follow up with Android 3.0 with full support, this technology should be widespread by 2012. The biggest problem Google and Android are running into is an inability to get manufacturers and carriers to release newer versions of the Android operating system quickly enough. This is evidenced by the fact that my phone, the Samsung Vibrant (arguably one of the most advanced Android hardware sets), is still only running Android 2.1 (Eclair).

Prediction Two: Windows Live Marketplace for Desktop

In general, Microsoft is great at identifying good technologies, and attempting to recreate them as Microsoft technologies. Microsoft's only entrant into the app store world is currently for their Windows Phone 7, but this will change as the shift to app stores continues. Unix/Linux users have long benefited from a central repository of software (apt repositories for Debian / Ubuntu, Ports for FreeBSD, Portage for Gentoo, yum for Red Hat, pacman for Arch, etc); Microsoft is going to want to capitalize on the capabilities and benefits of these types of systems.

Timing

I see them launching this at some point in late 2011 or 2012, probably coinciding with the next version of Windows, whenever that is finally released. Microsoft is slow to catch on to trends like this, but that's something they are always looking to improve and expedite, so we will see how quickly they can capitalize on this idea.

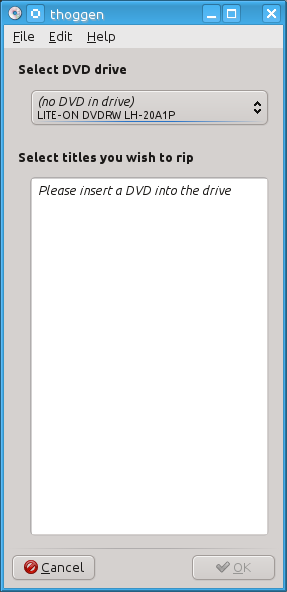

Software Review: Thoggen

by Stephen Fluin 2011.01.01

Thoggen is a tool for making backup copies of physical DVDs, ISOs, or folders using the DVD format. This tool has one of the simplest interfaces I have seen, attempting to take the Gnome philosophy of removing choice and complexity for the benefit of the users.

What Matters in a DVD Backup Tool

For me there are two pieces that really matter in a DVD backup tool, besides the obvious requirement of running on Linux. The first requirement is that I am able to make an encoded copy of my videos. The second requirement is that the output format is portable, usable, and as future-proof as possible. The first requirement eliminates a direct copy from the disk, as well as another tool that I used to use called K9Copy. K9Copy was a great tool, but had very sensitive settings, and I only had about a 30% success rate while using it. In the other cases it would generate unplayable copies of the DVD, that sometimes expanded to 8-10GB (bigger than the original disk!).

The second criterion has to do with the quality of the output. I've read many things about other tools, and I may end up switching to one of them, but Thoggen's biggest strength is that it outputs reasonably sized Ogg Vorbis/Theora encoded video. Ogg Vorbis is a great audio codec that maintains quality while achieving a smaller size than codecs like MP3. The Ogg Theora and Vorbis codecs are also free in theory. The companies that have produced them have released them with a license that they can never revoke, and that means that anyone can change, use, or distribute them without any restrictions. This is important because the future of H.264 is uncertain, as the licensing bodies controlling it will likely attempt to start charging for it at some point in the future. Ogg Theora isn't as good a video codec as H.264 in terms of output size, but it's good enough to use without accidentally filling up my hard drive.

I may someday wish to re-encode my videos, so I attempt to save them with "ok" quality that could survive a small bit of further degradation. To achieve this, I always choose Large as my output size, and I use fixed quality and leave it at the default of 50. Doing this outputs a video file that is about 1.0 GB, 608x320 (depending on cropping), 29.97fps.

Conclusions on Thoggen

Overall, this is a very solid tool that I will be using for the foreseeable future. This tool aligns well with my willingness to trade convenience (in the form of 30-40% smaller filesize) for freedom.

Backup Minecraft maps on Linux

by Stephen Fluin 2010.10.14

Minecraft is one of the best games of 2010. Unfortunately, in its current iteration it only supports a maximum of 5 saves, and these saves can get pretty big and important, as people are putting hundreds or thousands of hours into their Minecraft creations. With that much time invested, it is important to have a backup. It can also be helpful or fun to have incremental backups of the Minecraft map so that you can restore your map to an earlier state, or share progress with friends. My recommended solution is rdiff-backup.

rdiff-backup is a tool built on the capabilities of rsync, and it adds incremental storage along with cool abilities such as deleting incremental backups older than x days.

Backing up singleplayer Minecraft maps

If you want to back up all of your Minecraft settings, you should back up the entire ~/.minecraft folder. If you only want to back up a single save, you will need to know the number of the save; the path will look like ~/.minecraft/saves/World2/.
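
As a rough sketch of how these backups might be scripted, the functions below wrap rdiff-backup's classic command-line options. The BACKUP_DIR location and the function names are placeholders chosen for illustration; nothing in Minecraft or rdiff-backup requires them.

```shell
#!/bin/sh
# Sketch: incremental Minecraft backups with rdiff-backup.
# BACKUP_DIR is a hypothetical destination; change it to suit your system.
BACKUP_DIR="${BACKUP_DIR:-$HOME/backups/minecraft}"

# Back up everything under ~/.minecraft (settings and all saves).
backup_all() {
    mkdir -p "$BACKUP_DIR"
    rdiff-backup "$HOME/.minecraft" "$BACKUP_DIR/all"
}

# Back up a single save by folder name, e.g. "World2".
backup_save() {
    mkdir -p "$BACKUP_DIR"
    rdiff-backup "$HOME/.minecraft/saves/$1" "$BACKUP_DIR/$1"
}

# Delete increments of a save that are older than an age such as "30D".
# rdiff-backup may ask for --force when several increments would be removed.
prune_save() {
    rdiff-backup --remove-older-than "$2" "$BACKUP_DIR/$1"
}

# Restore a save as it was some time ago (e.g. "3D" for three days) into a
# separate folder, so the current save is not overwritten.
restore_save() {
    rdiff-backup -r "$2" "$BACKUP_DIR/$1" "$HOME/.minecraft/saves/$1-restored"
}
```

With these loaded into a shell, running backup_save World2 after each play session adds one increment, and prune_save World2 30D keeps the backup from growing forever.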

Backing up a Multiplayer Minecraft server

rdiff-backup is also great for backing up multiplayer servers, especially if the server is hosted remotely. This is because rdiff-backup is based on rsync, which gives it the ability to easily connect to other computers over SSH. The downside to backing up remote computers is that both machines must have rdiff-backup installed. If you are using Ubuntu, this is as easy as sudo apt-get install rdiff-backup. I store my remote files on a server called "yt", in the mcs folder. My world name is left as the default "world", but there is a folder based on your world name that you will need to look up. Once I have the right paths in mind, I use the following command to make the backup:
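
A command of roughly this shape fits the description above. The host (yt), folder (mcs), and world name (world) come from the text; the local destination directory and the function name are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch: pull an incremental backup of a remote Minecraft world over SSH.
REMOTE_HOST="yt"          # server name used in this article
REMOTE_WORLD="mcs/world"  # path relative to the remote home directory
# LOCAL_DEST is a hypothetical local directory for the backup.
LOCAL_DEST="${LOCAL_DEST:-$HOME/backups/minecraft-server}"

backup_server_world() {
    # The host::path form tells rdiff-backup to reach the path over SSH.
    # rdiff-backup must be installed on both machines for this to work.
    rdiff-backup "${REMOTE_HOST}::${REMOTE_WORLD}" "$LOCAL_DEST"
}
```

Calling backup_server_world from a cron job would give regular increments of the server world without logging in by hand.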

X Forwarding over Multiple SSH Hops

by Stephen Fluin 2010.08.07

X11 forwarding (AKA X Forwarding) is a slow but manageable way to run a program remotely, using a remote system's disk, memory, CPU, and filesystem, while sending all user interaction and display over the internet to be shown on your computer. X forwarding allows me to run visual diagnostic tools like kdirstat, or even pull up my home photo management program (fspot), without needing to install a local copy or connect to the remote disk and deal with those complexities.

Typical X Forwarding Use Case

Let's imagine for a moment you just want to inspect the remote filesystem with kdirstat.

Step 1 - ssh server01 -XYC The -X flag enables X forwarding, -Y enables "trusted" X forwarding, and -C compresses the communication.

Step 2 - Run your program, for example kdirstat / This command will run kdirstat using the memory, CPU, etc. of server01, but its window will be shown on the client's display and will respond to the client's mouse and keyboard.
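
The two steps can also be collapsed into one command by passing the program to ssh directly. The sketch below wraps that in a small helper; the function name is made up for illustration, while the flags are the ones described above.

```shell
#!/bin/sh
# Sketch: run one graphical program on a remote host and display it locally.
# -X enables X11 forwarding, -Y makes it "trusted", -C compresses the traffic.
run_remote_gui() {
    host="$1"
    shift
    # Everything after the host name is the remote command and its arguments.
    ssh -XYC "$host" "$@"
}
```

For example, run_remote_gui server01 kdirstat / opens kdirstat on server01 and closes the connection when the program exits.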

Multiple hop X Forwarding

Unfortunately, it's not as easy to chain X11 forwarding as it is to chain normal SSH connections. The workaround is to use SSH tunneling. The general strategy is to create an SSH tunnel through which you can open a second SSH connection.
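
A sketch of that strategy follows, with one function per terminal window. The local port 2222 is an assumption (any free local port should do), and the function names are made up for illustration; server01 and server02 are the hosts used in this article.

```shell
#!/bin/sh
# LOCAL_PORT is a hypothetical choice; pick any unused port on the client.
LOCAL_PORT="${LOCAL_PORT:-2222}"

# Terminal 1: an ordinary SSH session to server01 that also forwards
# localhost:$LOCAL_PORT on the client to the SSH port (22) of server02.
# Leave this session open for as long as the tunnel is needed.
open_tunnel() {
    ssh -L "$LOCAL_PORT:server02:22" server01
}

# Terminal 2: connect to the local end of the tunnel, which lands on
# server02, with X forwarding enabled, and run the given program there.
run_on_server02() {
    ssh -XYC -p "$LOCAL_PORT" localhost "$@"
}
```

In this sketch the first terminal runs open_tunnel and the second runs run_on_server02 kdirstat /.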

Using these commands in two terminal windows (the first one will just be a normal SSH connection to server01 that you will need to leave open) will open kdirstat / using the CPU, memory, etc of server02, on the display of the original client, as desired.

Dell Fully Drops Ubuntu

by Stephen Fluin 2010.07.26

Despite Dell's own statements about the quality and security of Linux (Ubuntu in particular), it seems that they have now dropped Ubuntu support from their website. As of now, Dell is no longer selling Ubuntu-based machines from their website.

I'm continually astounded by the fact that more people don't use Linux. Economics should dictate that when people want Ubuntu, and it sells well, they increase their offering. The problem with Operating System economics is that there is a huge fear of changing operating systems, making lock-in much worse than with normal market economics. The other piece is that Microsoft has an established monopoly. In order to use the software someone wants, that software has to be built for one or more operating systems. Most people can't switch to a better, higher quality, lower cost solution because their software is built for a specific list of operating systems (GoToMeeting, Adobe Products). This lock-in hurts consumers, and prevents better options from being a choice.

This problem has been alleviated somewhat by web-based software, but it continues to this day. This is why it's so saddening that Dell is giving up their Ubuntu offering.

Connect Multiple Screens with x2vnc

by Stephen Fluin 2010.07.19

There are a lot of ways to connect various Linux or Windows machines. Linux has great support for the Windows Remote Desktop Protocol (RDP) with rdesktop, but if you have a second (or third) screen that can be seen at the same time as your main screen, you should try out x2vnc.

What is X2VNC

X2VNC is a software tool readily available for Linux that creates a mapping between a VNC server (on any system type) and an X screen in Linux.

Example X2VNC Setup

I have a two-screen setup on Kubuntu. I also have a TV screen above and to the left of my two monitors. x2vnc allows me to map the VNC server running on the TV as a third screen on my main system. The first step was to install a VNC server on the computer connected to my TV. The second step was to run x2vnc -west yt:5900, which creates the screen mapping.
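
The second step can be wrapped in a small helper for setups where the extra screen sits on a different side. This is only a sketch: the function name is made up, and it assumes the four edge options x2vnc documents (-north, -south, -east, -west).

```shell
#!/bin/sh
# Sketch: attach a VNC server as an extra screen on one edge of the desktop.
# $1 is the edge the remote screen sits on, $2 is the VNC server address.
attach_vnc_screen() {
    case "$1" in
        north|south|east|west)
            # Moving the mouse off this edge switches control to the VNC screen.
            x2vnc "-$1" "$2"
            ;;
        *)
            echo "direction must be north, south, east, or west" >&2
            return 1
            ;;
    esac
}
```

The setup described above corresponds to attach_vnc_screen west yt:5900.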

It was allegedly announced that a new photo management tool called Shotwell will be replacing F-Spot in future Ubuntu releases. Whether or not this is true remains to be seen, as the only evidence I have found comes from a Slashdot article which refers to various tech blogs.

If it is the case that Shotwell is taking over for F-Spot as the photo management tool, I'm extremely disappointed. I just installed Shotwell to try it out on my computer and was shocked by what I found. After opening Shotwell, I discovered that in traditional GNOME fashion, there are NO OPTIONS, which means you can't configure how the import works, or where you store your collection.

The inability to do any configuration is a huge deal for me because although I store my photos in ~/Pictures, I currently manage them with F-Spot. This means that after I tried out an import with Shotwell, my F-Spot folders were being filled with unmanaged (by F-Spot) pictures. Fortunately Shotwell makes copies of images, rather than moving them, so I was able simply to trash all of the photos in Shotwell to restore my computer to the earlier state. Because there are no configuration options, there is absolutely no way for me to try out Shotwell, or to properly migrate my collection from what I have presently.

The other problem with Shotwell is that it seems very far from complete. To add tags, you have to right click or use the menu to manually "Add Tags" which then allows you to manually type each of the tags you want associated with a photo or a set of photos. This type of interface is clunky and takes users away from the ease of use and visual capabilities that F-Spot has.

Shotwell Recommendation

Perhaps things will get better in the future, but for now, stay very far away from Shotwell, or install it in a virtual machine.
